Thesis: 3D GANs to Autonomously Generate Building Geometry – Mueller


Information

| Field | Value |
| --- | --- |
| Primary software used | Python |
| Software version | 1.0 |
| Course | Computational Intelligence for Integrated Design |
| Primary subject | AI & ML |
| Secondary subject | Machine Learning |
| Level | Expert |
| Last updated | November 27, 2024 |
| Keywords | |

Responsible

| Faculty |


3D Generative Adversarial Networks to Autonomously Generate Building Geometry – Lisa-Marie Mueller

Generative Adversarial Networks (GANs) are a class of machine learning models that consist of two neural networks, the generator and the discriminator, which compete against each other in a zero-sum game. The generator's objective is to create synthetic data that closely resembles the training data, while the discriminator's goal is to distinguish real data from the generator's output. During training, the two networks are optimized in tandem: the generator improves at creating convincing data and the discriminator improves at detecting fakes. Ideally, this adversarial process continues until the discriminator can no longer tell the generated outputs from real data. Because GANs can learn complex data distributions, they have been widely used for image generation, video synthesis, and even music creation. In architecture, GANs have primarily been applied to 2D problems. This thesis explores their application to 3D data with a 3D output.
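The zero-sum game described above is commonly written as a minimax objective. The formulation below is the standard one from the general GAN literature, not a formula taken from the thesis; the symbols $G$ (generator), $D$ (discriminator), $p_{\text{data}}$ (distribution of the training data), and $p_z$ (distribution of the generator's noise input) are notation assumed here for illustration.

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_{z}}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

The discriminator tries to maximise $V$ by assigning high scores to real samples and low scores to generated ones, while the generator tries to minimise it. Variants of this objective exist; the most successful architecture reported in the Summary uses a Wasserstein loss instead.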

The purpose of this thesis is to investigate whether 3D GANs can produce larger and clearer (less noisy) output, so that they can generate high-quality, detailed building geometry.

The AI model used in this thesis is a Generative Adversarial Network (GAN).

APA: Mueller, L.M. (2023). 3D Generative Adversarial Networks to Autonomously Generate Building Geometry [Master thesis, TU Delft]. https://repository.tudelft.nl/record/uuid:b4a44f69-e3a4-4a29-85c4-77a7e899b81a

The master thesis is available in the TU Delft Repository: 3D Generative Adversarial Networks to Autonomously Generate Building Geometry.

Project Information

- Title: 3D Generative Adversarial Networks to Autonomously Generate Building Geometry

- Author(s): Lisa-Marie Mueller

- Year: 2023

- Link: https://repository.tudelft.nl/record/uuid:b4a44f69-e3a4-4a29-85c4-77a7e899b81a

- Type: Master thesis, Building Technology

- ML tags: Generative Adversarial Networks, Neural Networks

- Topic tags: Generative Design

Technical Aspects

Software & Plug-ins

Python, using the Keras API for TensorFlow

Workflow

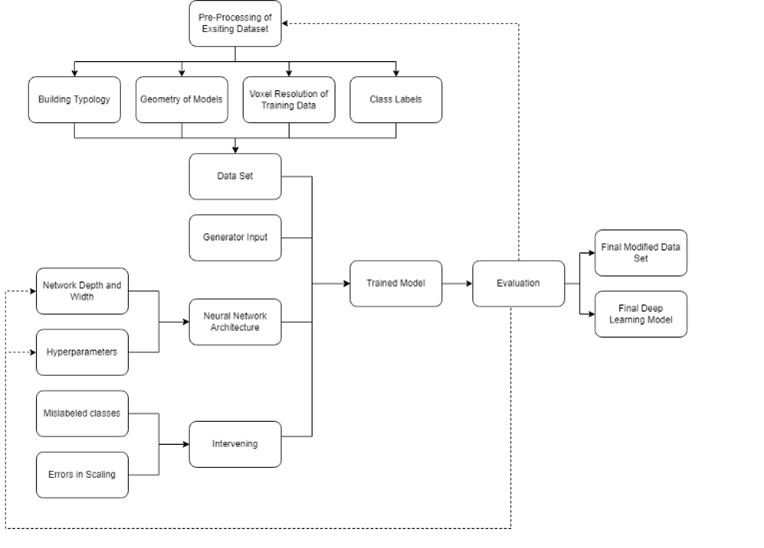

The steps followed in building the model are:

- Selecting a training data set consisting of 3D building models

- Selecting current state-of-the-art 3D GAN methods to test with the training data

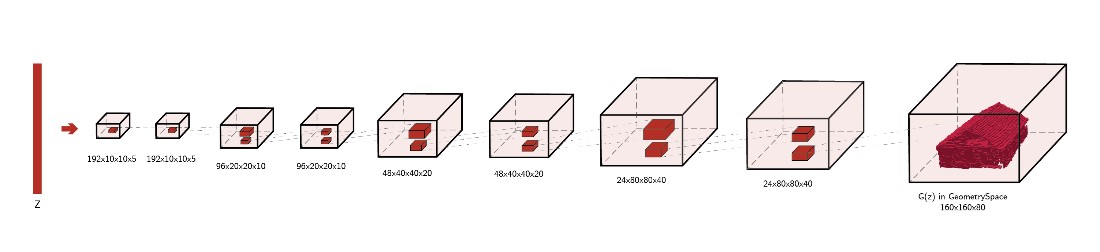

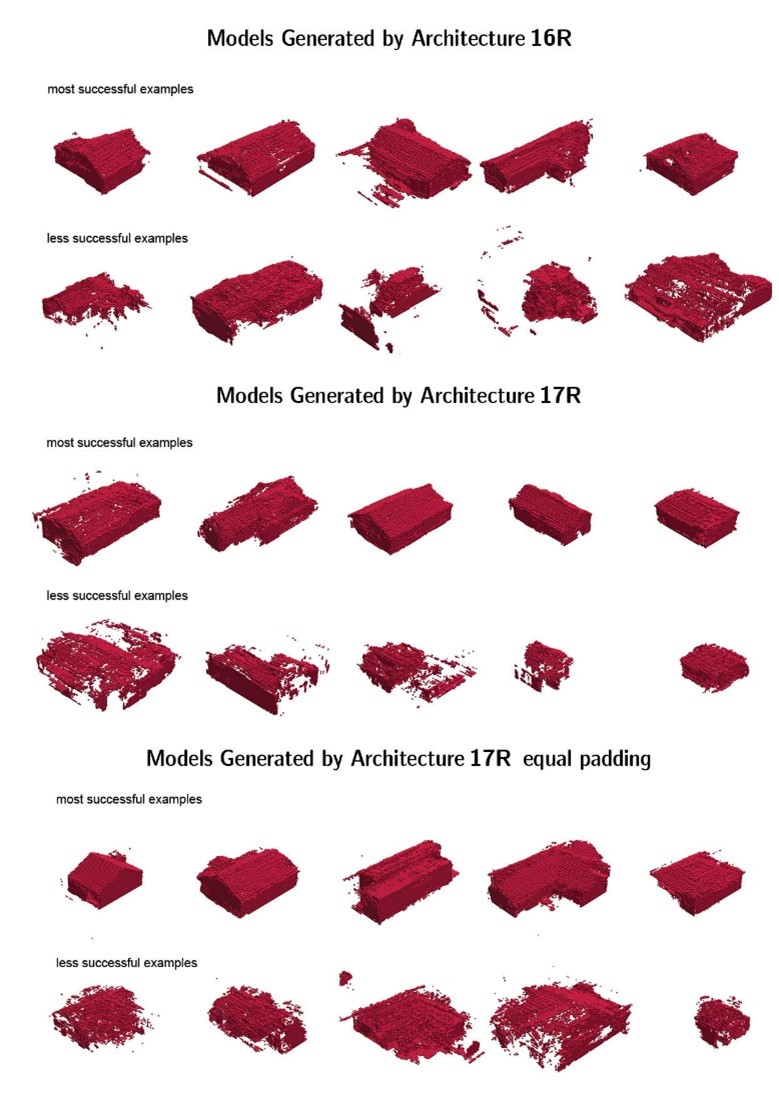

- Systematically reviewing and adjusting hyperparameters to develop a new GAN architecture that successfully creates 3D building geometry

- Adjusting the number of layers and channels to reduce noise in the output
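A systematic hyperparameter review of the kind listed above can be organised by enumerating every combination of candidate settings. The sketch below is only an illustration in plain Python: the dictionary `search_space` and the helper `enumerate_configs` are hypothetical names, and only the values marked "source" are mentioned on this page. The remaining values are placeholders, and the thesis may have organised its experiments differently.

```python
from itertools import product

# Hypothetical search space. Values marked "source" appear in the text;
# the others are placeholders added only to make the example complete.
search_space = {
    "loss": ["wasserstein_gp", "binary_crossentropy"],  # first: source
    "activation": ["leaky_relu", "relu"],               # first: source
    "optimizer": ["rmsprop", "adam"],                   # both: source
    "learning_rate": [0.00005, 0.0002],                 # first: source
}


def enumerate_configs(space):
    """Return every combination of hyperparameter values as a list of dicts."""
    keys = list(space)
    value_lists = [space[key] for key in keys]
    return [dict(zip(keys, values)) for values in product(*value_lists)]


configs = enumerate_configs(search_space)
print(len(configs))  # number of candidate configurations to train and compare
print(configs[0])    # the first combination in the grid
```

Each dictionary in `configs` would then drive one training run, ideally on a small network first so that many combinations can be compared quickly.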

Summary

The following conclusions were reached:

Key Hyperparameters: The most successful architecture used Wasserstein loss with gradient penalty, Leaky ReLU in both the generator and the critic, and RMSprop with a learning rate of 0.00005.
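For reference, the Wasserstein loss with gradient penalty and the Leaky ReLU activation are usually defined as follows. These are the standard textbook forms, not equations copied from the thesis. The symbols are assumptions introduced here: $C$ is the critic, $G$ the generator, $p_r$ and $p_g$ the real and generated data distributions, $\lambda$ the penalty weight, and $\alpha$ the negative slope. The values of $\lambda$ and $\alpha$ used in the thesis are not stated on this page.

```latex
\begin{aligned}
L_{C} &= \mathbb{E}_{\tilde{x} \sim p_{g}}\bigl[C(\tilde{x})\bigr]
       - \mathbb{E}_{x \sim p_{r}}\bigl[C(x)\bigr]
       + \lambda \, \mathbb{E}_{\hat{x}}\Bigl[\bigl(\lVert \nabla_{\hat{x}} C(\hat{x}) \rVert_{2} - 1\bigr)^{2}\Bigr], \\
\hat{x} &= \epsilon \, x + (1 - \epsilon) \, \tilde{x}, \qquad \epsilon \sim U[0, 1], \\
L_{G} &= -\mathbb{E}_{z \sim p_{z}}\bigl[C(G(z))\bigr], \\
\operatorname{LeakyReLU}(x) &= \max(\alpha x, \, x), \qquad 0 < \alpha < 1.
\end{aligned}
```

The critic minimises $L_C$ and the generator minimises $L_G$. The penalty term pushes the norm of the critic's gradient towards 1 at points $\hat{x}$ interpolated between real and generated samples.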

Optimizers:

Two optimizers were tested. With Adam, architectures performed better when learning rate decay was also used. With RMSprop, architectures performed better with a fixed learning rate. In the experiments, RMSprop outperformed Adam for this application when the output was compared against the models in the dataset for size, shape, and proportion.
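The difference between a fixed learning rate and learning rate decay can be made concrete with a small, framework-free sketch. The code below is a textbook illustration, not the thesis implementation: the function names are hypothetical, the decay values (`base_lr=0.0002`, `decay_rate=0.96`, `decay_steps=1000`) are made-up placeholders, and only the fixed rate of 0.00005 comes from the text. In a Keras workflow the optimizer classes would handle these updates internally.

```python
import math


def constant_lr(step, base_lr=0.00005):
    """Fixed learning rate (0.00005 is the value reported for RMSprop)."""
    return base_lr


def exponential_decay_lr(step, base_lr=0.0002, decay_rate=0.96, decay_steps=1000):
    """Learning rate that shrinks smoothly with the step count (placeholder values)."""
    return base_lr * decay_rate ** (step / decay_steps)


def rmsprop_step(param, grad, avg_sq, lr, rho=0.9, eps=1e-7):
    """One textbook RMSprop update for a single scalar parameter.

    avg_sq is a running average of squared gradients. Dividing the gradient
    by its square root normalises the step size across training.
    """
    avg_sq = rho * avg_sq + (1.0 - rho) * grad ** 2
    param = param - lr * grad / (math.sqrt(avg_sq) + eps)
    return param, avg_sq


# Toy problem: minimise f(w) = w^2 with RMSprop and a fixed learning rate.
w, avg_sq = 1.0, 0.0
for step in range(100):
    grad = 2.0 * w  # derivative of w^2
    w, avg_sq = rmsprop_step(w, grad, avg_sq, constant_lr(step))

print(w)                           # slightly below the starting value of 1.0
print(exponential_decay_lr(0))     # decay schedule at the first step
print(exponential_decay_lr(5000))  # decay schedule after 5000 steps
```

With such a small fixed rate the parameter moves slowly but steadily, which is the kind of stable behaviour a critic trained with a Wasserstein loss generally benefits from.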

Scaling architectures:

Architectures that perform well relative to others when all architectures have few layers and channels also perform well when the depth and width of the network are scaled up. Starting with smaller networks therefore makes it possible to test many architectures quickly. After a well-performing architecture has been identified, increasing the number of layers and channels can help to reduce the noise in the output. Any such scaling should keep the depth and width of the network in balance with the size of the training data.

Limitations: Due to limited data set availability, the training data set was quite small. The approach needs to be scaled up with a much larger data set, and memorization rejection strategies should also be explored.
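To get a feel for what scaling depth and width means in practice, the sketch below estimates the output resolution and parameter count of a hypothetical 3D generator. Every architectural detail is an assumption made for illustration and not taken from the thesis: a voxel-grid output, stride-2 transposed 3D convolutions with "same" padding (so each layer doubles the grid edge), channels that halve per layer, a kernel size of 4, and a final layer that maps to a single occupancy channel.

```python
def conv3d_params(in_channels, out_channels, kernel_size=4, use_bias=True):
    """Trainable parameters in one 3D (transposed) convolution layer."""
    weights = kernel_size ** 3 * in_channels * out_channels
    return weights + (out_channels if use_bias else 0)


def generator_summary(base_channels, num_upsampling_layers, start_resolution=4):
    """Return (output grid edge length, parameter count) for a hypothetical generator.

    Assumes each upsampling layer doubles the grid edge and halves the
    channel count, followed by one layer mapping to a single output channel.
    """
    resolution = start_resolution
    channels = base_channels
    total_params = 0
    for _ in range(num_upsampling_layers):
        next_channels = max(channels // 2, 1)
        total_params += conv3d_params(channels, next_channels)
        channels = next_channels
        resolution *= 2
    total_params += conv3d_params(channels, 1)  # final single-channel layer
    return resolution, total_params


small = generator_summary(base_channels=64, num_upsampling_layers=3)
large = generator_summary(base_channels=256, num_upsampling_layers=4)
print(small)  # small network: quick to train, useful for comparing architectures
print(large)  # scaled-up network: larger output grid, many more parameters
```

Under these assumptions, going from the small to the large configuration doubles the output grid edge but multiplies the parameter count roughly sixteenfold, which illustrates why network size has to be weighed against the size of the training data.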

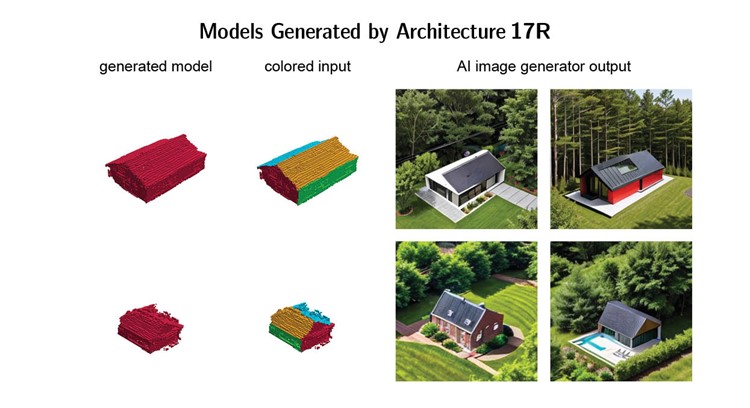

In the future, it is possible to imagine workflows that use different ML models for specific tasks: for example, starting with a generative model to create 3D building forms and then feeding the result into an image generator to determine materials and design details.
