Generative Models


Information

| Field | Value |
| --- | --- |
| Primary software used | Jupyter Notebook |
| Primary subject | AI & ML |
| Secondary subject | Machine Learning |
| Level | Intermediate |
| Last updated | November 27, 2024 |



Generative Models

Generative models are statistical models of the joint probability distribution of observable variables (variables that can be directly observed or measured) and target variables (dependent variables). Once such a distribution has been learned from a data set, it can be sampled to generate new data that resembles the original. There are many different kinds of generative models, including:

- Variational Autoencoders (VAEs)

- Generative Adversarial Networks (GANs)

- Flow Models

- Diffusion Models
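To make the definition concrete, here is a deliberately simple generative model in NumPy, much simpler than the four families listed. It is a minimal sketch, assuming the joint distribution is factored as P(X, Y) = P(Y) P(X | Y) and that each class-conditional P(X | Y) is a one-dimensional Gaussian. The toy data and the function names `fit_joint` and `sample_joint` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def fit_joint(x, y):
    """Estimate P(X, Y) = P(Y) * P(X | Y).

    Assumption: each class-conditional P(X | Y = k) is a 1-D Gaussian."""
    classes = np.unique(y)
    priors = np.array([np.mean(y == k) for k in classes])  # P(Y = k)
    means = np.array([x[y == k].mean() for k in classes])   # mean of P(X | Y = k)
    stds = np.array([x[y == k].std() for k in classes])     # std of P(X | Y = k)
    return classes, priors, means, stds


def sample_joint(model, n, rng):
    """Generate new (x, y) pairs: draw y from P(Y), then x from P(X | Y = y)."""
    classes, priors, means, stds = model
    idx = rng.choice(len(classes), size=n, p=priors)
    x_new = rng.normal(means[idx], stds[idx])
    return x_new, classes[idx]


# Made-up toy data set: two groups of measurements with labels 0 and 1.
x = np.concatenate([rng.normal(0.0, 1.0, 300), rng.normal(5.0, 0.5, 100)])
y = np.concatenate([np.zeros(300, dtype=int), np.ones(100, dtype=int)])

model = fit_joint(x, y)
x_new, y_new = sample_joint(model, 5, rng)  # five newly generated (x, y) pairs
```

The models in the following sections replace these hand-picked Gaussians with neural networks, which lets them represent far more complex distributions such as images or geometry.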


Variational Autoencoders (VAEs)

A VAE is an artificial neural network architecture with two components: an encoder and a decoder. Rather than mapping an input to a single point, the encoder maps it to the parameters (a mean and a variance) of a probability distribution over a latent space; a latent vector is then sampled from this distribution by adding scaled Gaussian noise to the mean. This distribution is called the variational distribution, and because the encoder outputs a distribution, multiple different samples can be drawn for the same input. The decoder then performs the reverse mapping, from a latent vector back to the input space. The encoder and decoder are trained together by maximizing the evidence lower bound (ELBO), which balances how well the decoder reconstructs the input against how close the variational distribution stays to a prior, typically a standard normal distribution. Although VAEs were initially designed for unsupervised learning, they have also proven effective for semi-supervised and supervised learning. VAEs are generally fast to train, but they do have limitations: their outputs are usually low in resolution, so they are best suited to applications where a high level of detail is not needed.
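The VAE forward pass and a common form of its training loss (the negative evidence lower bound, ELBO) can be sketched in NumPy. This is a minimal sketch under stated assumptions: tiny linear layers with hypothetical weight matrices `W_mu`, `W_logvar` and `W_dec` stand in for real encoder and decoder networks, and squared error stands in for the reconstruction log-likelihood. Only one forward pass is shown; the weight updates are omitted, since in practice an autodiff framework such as PyTorch or JAX computes the gradients.

```python
import numpy as np

rng = np.random.default_rng(0)


def encode(x, W_mu, W_logvar):
    """Hypothetical linear encoder: returns the mean and log-variance of a
    diagonal Gaussian over the latent space (the variational distribution)."""
    return x @ W_mu, x @ W_logvar


def reparameterize(mu, logvar, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I): the noise is added to
    the encoder's mean (the 'reparameterization trick')."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * logvar) * eps


def decode(z, W_dec):
    """Hypothetical linear decoder: maps latent vectors back to input space."""
    return z @ W_dec


def kl_to_standard_normal(mu, logvar):
    """Closed-form KL(N(mu, sigma^2) || N(0, I)), summed over latent
    dimensions and averaged over the batch."""
    return np.mean(-0.5 * np.sum(1.0 + logvar - mu**2 - np.exp(logvar), axis=1))


def negative_elbo(x, W_mu, W_logvar, W_dec, rng):
    """Loss to minimize: reconstruction error plus KL term. Squared error is a
    simplified reconstruction term (a Gaussian log-likelihood up to scale and constants)."""
    mu, logvar = encode(x, W_mu, W_logvar)
    z = reparameterize(mu, logvar, rng)
    x_hat = decode(z, W_dec)
    recon = np.mean(np.sum((x - x_hat) ** 2, axis=1))
    return recon + kl_to_standard_normal(mu, logvar), x_hat


# Assumed toy sizes: 16 inputs of dimension 8, compressed to a 2-D latent space.
x = rng.standard_normal((16, 8))
W_mu = 0.1 * rng.standard_normal((8, 2))
W_logvar = 0.1 * rng.standard_normal((8, 2))
W_dec = 0.1 * rng.standard_normal((2, 8))

loss, x_hat = negative_elbo(x, W_mu, W_logvar, W_dec, rng)

# Generation: draw latent vectors from the prior N(0, I) and decode them.
samples = decode(rng.standard_normal((4, 2)), W_dec)
```

The KL term is what keeps the latent space close to a standard normal, which is why sampling `z` from N(0, I) at generation time produces sensible decoder inputs.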


Generative Adversarial Networks (GANs)

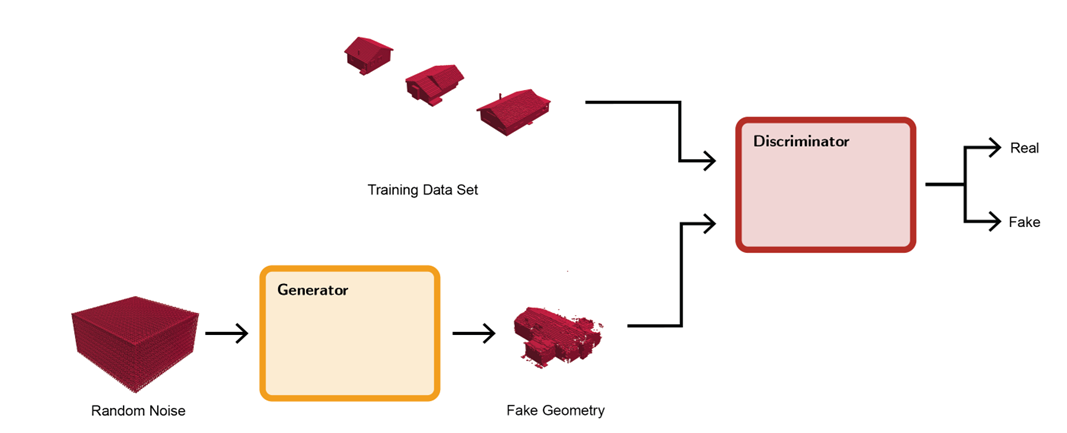

GANs [Goodfellow et al., 2014] consist of two artificial neural networks (ANNs) that are trained to compete against each other in a zero-sum game. The generator produces candidate samples intended to resemble the data set, while the discriminator evaluates whether each candidate is real (taken from the data set) or fake (produced by the generator). The image above shows an overview of a typical GAN model. The generator has a single input, random noise, and outputs a fake geometry. The discriminator learns the characteristics of the data set and uses them to output the probability that a given geometry is real rather than fake. The generator is rewarded when it fools the discriminator, and the discriminator is rewarded when it correctly classifies the geometry. GANs were used in several early text-to-image generators, such as StackGAN and AttnGAN.
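The reward structure of the two networks can be written as a pair of loss functions. The NumPy sketch below assumes a hypothetical linear `generator` and a logistic-regression `discriminator` with random, untrained weights, and uses the binary cross-entropy discriminator loss together with the "non-saturating" generator loss suggested by Goodfellow et al. (2014). It computes one evaluation of both losses; it is not a full training loop.

```python
import numpy as np

rng = np.random.default_rng(0)


def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))


def generator(z, A, b):
    """Hypothetical linear generator: turns random noise z into fake samples."""
    return z @ A + b


def discriminator(x, w, c):
    """Hypothetical logistic-regression discriminator: returns P(x is real)."""
    return sigmoid(x @ w + c)


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy: low when real samples score near 1 and fakes near 0,
    i.e. the discriminator is rewarded for classifying correctly."""
    return -np.mean(np.log(d_real + eps)) - np.mean(np.log(1.0 - d_fake + eps))


def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: low when the discriminator scores fakes
    near 1, i.e. the generator is rewarded for fooling it."""
    return -np.mean(np.log(d_fake + eps))


# Assumed toy setup: 3-D "geometry" vectors, 2-D noise, random untrained weights.
real = rng.normal(loc=2.0, scale=0.5, size=(32, 3))
z = rng.standard_normal((32, 2))
A, b = rng.standard_normal((2, 3)), np.zeros(3)
w, c = rng.standard_normal(3), 0.0

fake = generator(z, A, b)
d_loss = discriminator_loss(discriminator(real, w, c), discriminator(fake, w, c))
g_loss = generator_loss(discriminator(fake, w, c))
```

In a full training loop, the two losses are minimized in alternation, each with respect to its own network's weights. At the theoretical equilibrium the discriminator outputs 0.5 for every input, which gives a discriminator loss of 2 log 2.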

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., and Bengio, Y. (2014). Generative Adversarial Nets. Advances in Neural Information Processing Systems, 27.


Flow Models

Flow models use a series of invertible transformation functions that map data to their latent representations. Each data point has a corresponding latent representation of the same shape as the input data, and because every transformation is invertible, the flow model can reconstruct the original data exactly from its latent representation. Once the model has learned the mapping between the input data and the latent space, it can generate an output by applying the inverse mapping to an input latent vector, typically one sampled from a simple distribution such as a standard Gaussian. Flow models can be inverted in parallel, but they require a lot of resources to train. They produce good-quality outputs, similar to those of GANs, but at a significantly higher computational cost.
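A minimal NumPy sketch of a flow for 2-D data, assuming a RealNVP-style affine coupling design (one common way to build invertible layers; the section does not prescribe a particular architecture). The `AffineCoupling` class, its random untrained parameters, and the helper names are hypothetical. It shows the three properties described above: latent vectors have the same shape as the data, the mapping can be inverted exactly, and new samples come from inverting random latent vectors. Because each layer's Jacobian determinant is cheap to compute, the sketch also evaluates the exact log-likelihood via the change-of-variables formula, log p(x) = log p_Z(f(x)) + log|det ∂f/∂x|.

```python
import numpy as np

rng = np.random.default_rng(0)


class AffineCoupling:
    """Hypothetical RealNVP-style affine coupling layer for 2-D data.

    One coordinate passes through unchanged and determines a scale and shift
    for the other coordinate, so the layer can be inverted in closed form."""

    def __init__(self, rng, swap=False):
        # Random, untrained scalar parameters (illustration only).
        self.w_s, self.b_s = 0.5 * rng.standard_normal(2)
        self.w_t, self.b_t = 0.5 * rng.standard_normal(2)
        self.swap = swap  # alternate which coordinate gets transformed

    def _split(self, v):
        return (v[:, 1], v[:, 0]) if self.swap else (v[:, 0], v[:, 1])

    def _join(self, a, b):
        return np.stack([b, a], axis=1) if self.swap else np.stack([a, b], axis=1)

    def _scale_shift(self, a):
        s = np.tanh(self.w_s * a + self.b_s)  # bounded log-scale for stability
        t = self.w_t * a + self.b_t
        return s, t

    def forward(self, x):
        """Data -> latent. Returns the output and log|det Jacobian| per sample."""
        a, b = self._split(x)
        s, t = self._scale_shift(a)
        return self._join(a, b * np.exp(s) + t), s

    def inverse(self, z):
        """Latent -> data, undoing forward() exactly."""
        a, zb = self._split(z)
        s, t = self._scale_shift(a)
        return self._join(a, (zb - t) * np.exp(-s))


layers = [AffineCoupling(rng, swap=(i % 2 == 1)) for i in range(4)]


def to_latent(x):
    log_det = np.zeros(len(x))
    for layer in layers:
        x, ld = layer.forward(x)
        log_det += ld
    return x, log_det


def to_data(z):
    for layer in reversed(layers):
        z = layer.inverse(z)
    return z


def log_prob(x):
    """Exact log-likelihood by change of variables, with a standard normal base."""
    z, log_det = to_latent(x)
    log_base = -0.5 * np.sum(z**2, axis=1) - 0.5 * z.shape[1] * np.log(2 * np.pi)
    return log_base + log_det


x = rng.standard_normal((5, 2))
z, _ = to_latent(x)   # same shape as x: one latent vector per data point
x_rec = to_data(z)    # exact reconstruction, up to floating-point error
samples = to_data(rng.standard_normal((3, 2)))  # generation: sample latent, invert
```

Training such a model would maximize `log_prob` on the data set; keeping every layer both invertible and expressive is part of what makes flows expensive.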


Diffusion Models

Diffusion models began to gain popularity around 2020 and have since replaced GANs in many image generation systems, for example DALL-E 2. Diffusion models have been shown to produce higher-quality outputs, but they are also computationally intensive to train. They work by gradually corrupting the training data through the successive addition of Gaussian noise until the original data is destroyed, and then learning to reverse this process. Once trained, the model takes random noise as input and applies the learned denoising process to it to generate an image. Unlike VAEs and GANs, which produce a sample in a single pass through the network, diffusion models generate samples one step at a time: they first produce a coarse, noisy image and then progressively refine it, adding detail at each step.
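The noising and step-by-step denoising can be sketched in NumPy, assuming the DDPM formulation (Ho et al., 2020) with a linear noise schedule. `add_noise` implements the forward process in closed form and returns the noise it added, which is the target a denoising network would be trained to predict. Because training a network is out of scope here, the hypothetical `predict_noise` function is an "oracle" placeholder that assumes the training set is a single known point, so the exact noise can be computed analytically; it is purely illustrative and is not how a real model works.

```python
import numpy as np

rng = np.random.default_rng(0)

# Linear noise schedule (assumed values, following the DDPM paper).
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)


def add_noise(x0, t, rng):
    """Forward process in closed form: x_t = sqrt(abar_t) x0 + sqrt(1 - abar_t) eps."""
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps, eps


def predict_noise(x_t, t, x0_data):
    """Placeholder for a trained network eps_theta(x_t, t).

    Oracle assumption: the training set is the single point x0_data, so the
    noise contained in x_t can be computed exactly."""
    return (x_t - np.sqrt(alpha_bars[t]) * x0_data) / np.sqrt(1.0 - alpha_bars[t])


def sample(shape, x0_data, rng):
    """Reverse process (DDPM ancestral sampling): start from pure noise and
    denoise one step at a time."""
    x = rng.standard_normal(shape)
    for t in reversed(range(T)):
        eps_hat = predict_noise(x, t, x0_data)
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        noise = rng.standard_normal(shape) if t > 0 else 0.0  # no noise on the last step
        x = mean + np.sqrt(betas[t]) * noise
    return x


x0 = np.array([1.0, -2.0, 0.5])
x_T, _ = add_noise(x0, T - 1, rng)        # after T steps: almost pure Gaussian noise
generated = sample(x0.shape, x0, rng)     # ends close to x0 thanks to the oracle
```

Replacing `predict_noise` with a neural network trained to predict `eps` from `x_t` and `t` gives the standard DDPM sampler. The loop over all `T` steps also shows why sampling is slow compared with the single forward pass of a GAN or VAE.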
